AI Now is an organization that studies and works on AI (Artificial Intelligence). Its members are experts from academia as well as practitioners from companies such as Google and Microsoft.

These experts come together and work collaboratively for the benefit of humanity as a whole.

You can join as well, because the organization is open and needs input from everyone, including users. This is your chance to help save the world. You can start by studying the form, or the complete anatomy, of AI, and you can also follow every publication the organization releases.

In its latest report, the organization raised concerns that many organizations, both government and private, are now developing and deploying AI very rapidly but with little transparency.

The Facebook case is an easy example. One might assume that the AI only involves people who agreed to share their data with Facebook's algorithms, yet it turned out that the data and location of some people outside that circle of friends could also be traced through Facebook. Many people are unaware of how far AI has already spread and how little transparency there is.

These AI experts are deeply concerned and believe that regulation and government involvement are needed. At the same time, they want protections in place for individuals or companies that feel the need to share information with other parties when necessary.

On the use of user data, the experts also stress that advertisers and product owners must be open with users and explain what effects their algorithms have.

AI can also be designed to benefit one party while harming another, so the AI experts are asking governments to exercise oversight that ensures transparency and fairness.

Recommendations (from the AI Now group):

1. Governments need to regulate AI by expanding the powers of sector-specific agencies to oversee, audit, and monitor these technologies by domain.

The implementation of AI systems is expanding rapidly, without adequate governance, oversight, or accountability regimes. Domains like health, education, criminal justice, and welfare all have their own histories, regulatory frameworks, and hazards. ...

2. Facial recognition and affect recognition need stringent regulation to protect the public interest.

Such regulation should include national laws that require strong oversight, clear limitations, and public transparency. Communities should have the right to reject the application of these technologies in both public and private contexts. ......

3. The AI industry urgently needs new approaches to governance.

As this report demonstrates, internal governance structures at most technology companies are failing to ensure accountability for AI systems. Government regulation is an important component, but leading companies in the AI industry also need internal accountability structures that go beyond ethics guidelines. .....

4. AI companies should waive trade secrecy and other legal claims that stand in the way of accountability in the public sector.

Vendors and developers who create AI and automated decision systems for use in government should agree to waive any trade secrecy or other legal claim that inhibits full auditing and understanding of their software. ....

5. Technology companies should provide protections for conscientious objectors, employee organizing, and ethical whistleblowers.

Organizing and resistance by technology workers has emerged as a force for accountability and ethical decision making. Technology companies need to protect workers’ ability to organize, whistleblow, and make ethical choices about what projects they work on. .....

6. Consumer protection agencies should apply “truth-in-advertising” laws to AI products and services.

The hype around AI is only growing, leading to widening gaps between marketing promises and actual product performance. With these gaps come increasing risks to both individuals and commercial customers, often with grave consequences. .....

7. Technology companies must go beyond the “pipeline model” and commit to addressing the practices of exclusion and discrimination in their workplaces.

Technology companies and the AI field as a whole have focused on the “pipeline model,” looking to train and hire more diverse employees. While this is important, it overlooks what happens once people are hired into workplaces that exclude, harass, or systemically undervalue people on the basis of gender, race, sexuality, or disability. .....

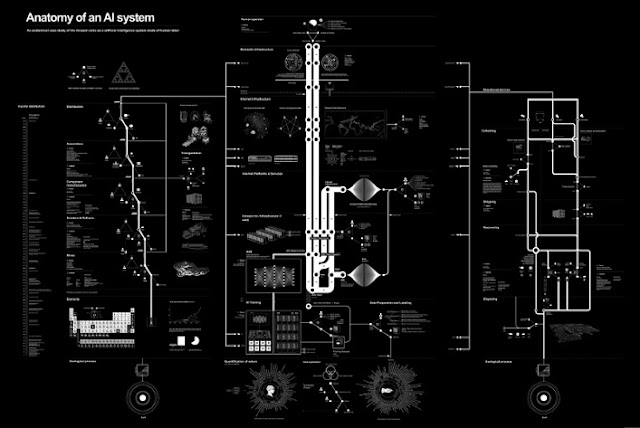

8. Fairness, accountability, and transparency in AI require a detailed account of the “full stack supply chain.”

For meaningful accountability, we need to better understand and track the component parts of an AI system and the full supply chain on which it relies: that means accounting for the origins and use of training data, test data, models, application program interfaces (APIs), and other infrastructural components over a product life cycle. We call this accounting for the “full stack supply chain” of AI systems, and it is a necessary condition for a more responsible form of auditing. ......

9. More funding and support are needed for litigation, labor organizing, and community participation on AI accountability issues.

The people most at risk of harm from AI systems are often those least able to contest the outcomes. We need increased support for robust mechanisms of legal redress and civic participation. .....

10. University AI programs should expand beyond computer science and engineering disciplines.

AI began as an interdisciplinary field, but over the decades has narrowed to become a technical discipline. With the increasing application of AI systems to social domains, it needs to expand its disciplinary orientation. .....